Development on iCaption 2.0 stopped over a month ago, as the release cycle wound down to its final phases. While this version is huge, with many great new features, there are some wish list items that just couldn’t make the cut.

The most notable and sought-after feature, both by me and by customers, is waveform visualization, which happens to be the next natural step after implementing the new timeline view. Waveform visualization didn’t make the 2.0 cut simply because it’s difficult; had it been in this release, it could easily have doubled the scope of the timeline feature.

There are multiple points of difficulty with waveform visualization and, left unaddressed, the application will frankly feel like shit, which will in turn soil the user experience rather than enrich it. I’ve seen an audio application make me wait for what felt like a minute to analyze a 4-minute song. An algorithm like that would never fly if you’re trying to subtitle a 120-minute movie.

Algorithmically, there are three problems: analyzing each frame of a video’s audio track in a timely manner, keeping the application responsive while that analysis runs (which may involve background loading or streaming while also keeping the video available to the user), and managing a cache of waveform data. The background thread is tricky because a QTMovie must detach from the main thread in order to become accessible from another thread, but that would make the video unavailable to the user, who will be interacting via the main thread. There’s also coming up with a reasonably fast approach to drawing tens or hundreds of thousands of audio samples on screen at any time without the user noticing the CPU being pegged (and it can’t simply reuse a pre-render for each draw, because the waveform should squish/stretch and cull based on the current view of the timeline).
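To make the drawing problem a little more concrete, here is a minimal sketch in plain C of one common approach: reduce the visible slice of samples to a single min/max pair per pixel column, so the draw cost depends on the view width rather than on the number of samples. This is not iCaption’s actual code. The names `PeakColumn` and `reduce_to_peaks` are hypothetical, and it assumes the audio has already been decoded into a mono buffer of floats.

```c
#include <stddef.h>
#include <stdint.h>

/* One pixel column of the waveform: the lowest and highest sample it covers.
   (Hypothetical type, for illustration only.) */
typedef struct {
    float min;
    float max;
} PeakColumn;

/*
 * Reduce the visible sample range [first, first + count) to `columns`
 * min/max pairs, one per pixel column of the timeline view.
 *
 * Assumes `samples` holds `total` decoded mono samples. Anything past the
 * end of the buffer is culled; columns that cover no samples are left flat
 * at 0. Changing `count` for the same `columns` is what squishes or
 * stretches the waveform.
 */
void reduce_to_peaks(const float *samples, uint64_t total,
                     uint64_t first, uint64_t count,
                     PeakColumn *out, size_t columns)
{
    for (size_t c = 0; c < columns; c++) {
        /* Integer bucket edges: adjacent columns never overlap or leave gaps.
           64-bit math so long tracks do not overflow in a 32-bit build. */
        uint64_t begin = first + (count * (uint64_t)c) / columns;
        uint64_t end   = first + (count * (uint64_t)(c + 1)) / columns;

        /* Zoomed in past one sample per column: reuse the nearest sample. */
        if (end == begin && begin < first + count) {
            end = begin + 1;
        }
        /* Cull whatever falls outside the decoded buffer. */
        if (end > total) {
            end = total;
        }

        PeakColumn peak = { 0.0f, 0.0f };
        if (begin < end) {
            peak.min = peak.max = samples[begin];
            for (uint64_t i = begin + 1; i < end; i++) {
                if (samples[i] < peak.min) peak.min = samples[i];
                if (samples[i] > peak.max) peak.max = samples[i];
            }
        }
        out[c] = peak;
    }
}
```

Each column then becomes one vertical line from `min` to `max`. For a long movie the inner loop is still too slow to run over raw samples on every redraw, which is where the cache comes in: the same reduction could be run once at a few fixed zoom levels and the drawing code would read from the closest one.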

On the API side, as far as I can tell, the examples Apple provides for audio frequency level extraction seem to have no 64-bit support, forcing me to drop down and compile iCaption as 32-bit-only. This should in theory still work fine on 64-bit Mountain Lion, but it’s unsettling at best to make your project have to “go there”. There may be some newer API, but if it exists I’m sure I’d have to drop Snow Leopard or even Lion support, as I’m seeing a trend of favouring Mountain Lion-specific APIs. Worst case, I would try to make one final Snow Leopard-compatible release of iCaption before switching to the 10.8 SDK.

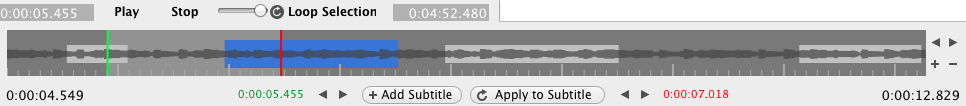

In ferocious spite of the above, I have already made enough serious headway with iCaption 2.1.0’s WIP to leave you all with a bit of a teaser.

Version 2.1.0 will be done when it’s done; don’t hold your breath.